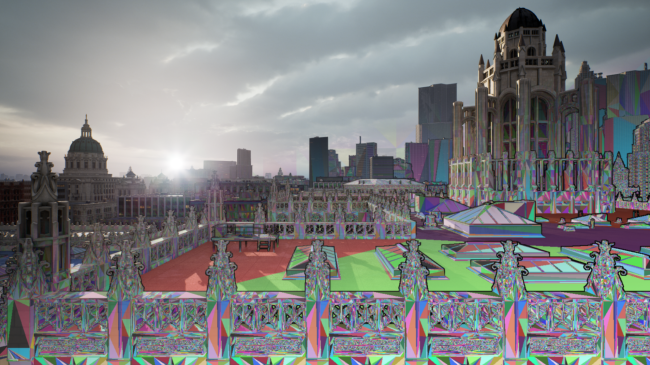

Unreal Engine 5, released in April 2022, has already revolutionized many processes in the 3D world.

This is made possible by its key new features: Lumen for real-time global illumination, Chaos for physics and, most importantly, Nanite for performance. In this article, we focus on the real revolution Nanite brings to the way geometry is computed.

What are the benefits of Nanite?

Nanite’s computational advances make geometry far cheaper to handle and reduce the need to rely on textures to fake detail, across the 3D world in general and for 3D artists in particular. In this sense, Nanite breaks boundaries by making high-end graphics more accessible, and pushes the limits by transforming the quality of visual rendering while improving fluidity and efficiency.

In other words, we used to settle for ugly renderings to gain efficiency; now, thanks to Nanite’s optimizations, we can combine rendering quality and efficiency.

How has Nanite revolutionized the power of visual rendering?

Nanite focuses on the mesh, the part of the pipeline that new rendering technologies have optimized the most, which reduces the need to hand-optimize shapes and polygon counts. Polygon count is becoming less and less important in the 3D world in general, and with Unreal Engine 5 it has become a secondary detail, whereas it used to be a major challenge.

One of Nanite’s main tricks is that it only renders the exact level of detail needed for the display to look great at the current screen resolution, no more, no less.

Nanite only shows the essentials! To achieve this, Epic had to transform the way LODs (Levels of Detail) are handled.
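To make the idea concrete, here is a minimal Python sketch of screen-space LOD selection. It assumes each LOD level carries a precomputed geometric error in world units. The function names, the one-pixel threshold and the projection formula are illustrative assumptions, not Nanite’s actual code. The sketch picks the coarsest level whose error, once projected on screen, stays below one pixel.

```python
import math
from typing import Sequence


def projected_error_pixels(world_error: float, distance: float,
                           fov_y_radians: float, screen_height_px: int) -> float:
    """Convert a geometric error (world units) seen at `distance` into pixels."""
    # Height of the view frustum slice at this distance, in world units.
    frustum_height = 2.0 * distance * math.tan(fov_y_radians / 2.0)
    return world_error * screen_height_px / frustum_height


def select_lod(lod_errors: Sequence[float], distance: float, fov_y_radians: float,
               screen_height_px: int, threshold_px: float = 1.0) -> int:
    """Return the index of the coarsest LOD whose on-screen error is acceptable.

    `lod_errors` is ordered from finest (index 0) to coarsest, with increasing error.
    """
    chosen = 0
    for index, error in enumerate(lod_errors):
        if projected_error_pixels(error, distance, fov_y_radians,
                                  screen_height_px) <= threshold_px:
            chosen = index  # still invisible on screen: a coarser level is fine
        else:
            break  # errors only grow from here, so stop searching
    return chosen
```

With a 90° vertical field of view on a 1080-pixel-tall screen, an object right in front of the camera keeps a fine level. The same object 100 units away can drop to its coarsest level, because its simplification error shrinks below a pixel.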

Clustering: how Nanite achieves a high level of performance

Epic Games built on research published by Nvidia a few years earlier on mesh clustering techniques to develop a new approach to optimizing calculations.

Rather than basing the calculations on whole meshes, Nanite divides each mesh into zones (clusters) to better capture the geometry and shape of the object, and then computes only what the display needs at a given moment. Each mesh is split into clusters of up to 128 triangles, which are organized into a hierarchy whose depth depends on the complexity of the shape. In addition, Nanite takes into account the number of pixels an object covers on screen to decide how much detail it must display to obtain the best rendering quality.
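The clustering step can be illustrated with a minimal Python sketch that groups triangles into spatially coherent clusters of at most 128 triangles. Sorting triangle centroids along a Morton (Z-order) curve is a simple stand-in chosen for this illustration. Nanite’s own partitioning is more sophisticated, and the names below are hypothetical.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
MAX_TRIANGLES_PER_CLUSTER = 128  # maximum cluster size, in triangles


def morton3(x: int, y: int, z: int, bits: int = 10) -> int:
    """Interleave the bits of three integers into a single Z-order key."""
    code = 0
    for i in range(bits):
        code |= ((x >> i) & 1) << (3 * i)
        code |= ((y >> i) & 1) << (3 * i + 1)
        code |= ((z >> i) & 1) << (3 * i + 2)
    return code


def build_clusters(centroids: Sequence[Vec3],
                   max_size: int = MAX_TRIANGLES_PER_CLUSTER) -> List[List[int]]:
    """Split triangle indices into clusters of triangles that are close in space."""
    if not centroids:
        return []
    mins = [min(c[axis] for c in centroids) for axis in range(3)]
    maxs = [max(c[axis] for c in centroids) for axis in range(3)]
    extents = [(maxs[axis] - mins[axis]) or 1.0 for axis in range(3)]

    def z_order_key(index: int) -> int:
        # Quantize the centroid to a 10-bit grid inside the bounding box.
        q = [int((centroids[index][axis] - mins[axis]) / extents[axis] * 1023)
             for axis in range(3)]
        return morton3(q[0], q[1], q[2])

    # Triangles that are close in space end up close in this ordering,
    # so consecutive chunks form compact clusters.
    order = sorted(range(len(centroids)), key=z_order_key)
    return [order[i:i + max_size] for i in range(0, len(order), max_size)]
```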

This could be considered an LOD approach. The major difference is that the LODs are not authored by a 3D artist while the game is being designed. They are generated automatically from the topological complexity of the geometry’s clusters.
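Automatic LOD generation can be sketched as building a hierarchy bottom-up. Groups of neighboring clusters are merged and simplified into a coarser parent cluster, and this repeats until a single root remains. This minimal Python sketch uses a simple tree for clarity. The simplifier is a hypothetical placeholder that only halves the triangle count, and the error values are arbitrary. A real implementation would run a mesh simplification algorithm and measure the actual geometric error.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ClusterNode:
    triangle_count: int
    error: float  # geometric error introduced by simplification (0 for source data)
    children: List["ClusterNode"] = field(default_factory=list)


def simplify_group(group: List[ClusterNode], max_triangles: int = 128) -> ClusterNode:
    """Hypothetical simplifier: merge a group of clusters into one coarser cluster."""
    total = sum(child.triangle_count for child in group)
    triangles = min(max(1, total // 2), max_triangles)
    # A parent is never more accurate than its children, which keeps
    # LOD selection consistent when walking down the hierarchy.
    error = max(child.error for child in group) + 1.0
    return ClusterNode(triangles, error, list(group))


def build_lod_hierarchy(leaf_triangle_counts: List[int],
                        group_size: int = 4) -> List[List[ClusterNode]]:
    """Return the hierarchy level by level, from the source clusters to the root."""
    level = [ClusterNode(count, 0.0) for count in leaf_triangle_counts]
    levels = [level]
    while len(level) > 1:
        level = [simplify_group(level[i:i + group_size])
                 for i in range(0, len(level), group_size)]
        levels.append(level)
    return levels
```

For 16 source clusters of 128 triangles, this produces three levels of 16, 4 and 1 clusters, with the error growing by one arbitrary unit per level.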

The complexity of a scene is then no longer dictated by the raw geometry or meshes, but by the cluster structure of the objects and the detail actually visible on screen. The source geometry and meshes thus largely drop out of the equation and become a minor factor in the calculation.

Display and DMA: pre-calculation and instant data display

Of course, clustering involves a considerable amount of computation and data transfer at runtime, which raises the hardware performance needed to execute it.

To solve this problem, Unreal Engine takes advantage of DMA, a mechanism also exploited by the I/O architectures of Microsoft’s and Sony’s latest consoles. Direct Memory Access lets hardware such as the GPU access memory directly without going through the CPU, which speeds up data transfers.

Nanite therefore pre-computes all the cluster information and stores it in memory. The GPU then fetches this information to display the right clusters at the right time, faster than ever before.
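The pre-compute-then-fetch idea can be sketched as a fixed-size pool of resident cluster pages. Data prepared offline is copied in only when the renderer requests it, and the least recently used pages are evicted when the pool is full. This minimal Python sketch is an assumption about how such a cache could look, not Nanite’s implementation. The `load_page` callback is a hypothetical stand-in for the actual memory transfer.

```python
from collections import OrderedDict
from typing import Callable, Hashable, List


class ClusterPool:
    """Fixed-capacity cache of precomputed cluster pages (simulated GPU memory)."""

    def __init__(self, capacity: int, load_page: Callable[[Hashable], bytes]):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self.load_page = load_page  # stands in for a transfer of precomputed data
        self.resident = OrderedDict()  # page id -> data, least recently used first
        self.transfers = 0

    def request(self, page_ids: List[Hashable]) -> List[bytes]:
        """Return the data for the requested pages, loading the missing ones."""
        result = []
        for page_id in page_ids:
            if page_id in self.resident:
                self.resident.move_to_end(page_id)  # mark as recently used
            else:
                if len(self.resident) >= self.capacity:
                    self.resident.popitem(last=False)  # evict least recently used
                self.resident[page_id] = self.load_page(page_id)
                self.transfers += 1
            result.append(self.resident[page_id])
        return result
```

Pages that stay visible from frame to frame are served from the pool without a new transfer. Only newly visible clusters cost a copy.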

With these new methods, we consider that the major challenge for Epic Games is no longer the number of polygons. It is now the overlap of meshes and the density of geometry that the engine can render while maintaining a high frame rate.

For a much more in-depth technical explanation, we recommend reading this article.

With the power of Nanite, what are the changes for SkyReal and its customers?

SkyReal’s customers care a great deal about the number of elements in a single model, as the industrial sector demands huge numbers of parts and fine detail on each of them. With UE4, Epic Games recommended a limit of 10,000 items and 200 million triangles per model, whereas our customers’ models can exceed 300,000 items and 800 million triangles. Closing that gap is essential if SkyReal wants to provide a good user experience.
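A quick calculation with the figures above shows the size of the gap. This short Python snippet only reproduces that arithmetic. Customer models exceed the UE4 recommendation 30 times over in items and 4 times over in triangles. The item count is therefore the figure that overshoots by the widest margin.

```python
# Figures quoted in the text above.
UE4_RECOMMENDED = {"items": 10_000, "triangles": 200_000_000}
CUSTOMER_MODEL = {"items": 300_000, "triangles": 800_000_000}


def overshoot(model: dict, limit: dict) -> dict:
    """How many times each quantity of the model exceeds the recommended limit."""
    return {key: model[key] / limit[key] for key in limit}


def triangles_per_item(model: dict) -> float:
    """Average number of triangles per item in a model."""
    return model["triangles"] / model["items"]


print(overshoot(CUSTOMER_MODEL, UE4_RECOMMENDED))  # {'items': 30.0, 'triangles': 4.0}
print(round(triangles_per_item(UE4_RECOMMENDED)))  # 20000
print(round(triangles_per_item(CUSTOMER_MODEL)))   # 2667
```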

Our main challenge is therefore to gain enough performance to load our customers’ extremely large models without degrading the display. Several approaches could address this problem, but SkyReal has decided to start by accelerating the import and conversion of 3D data. The SkyReal teams have been working for more than a year on a platform we call XR Center, which will profoundly change the way CAD data is transformed.

To speed up the Nanite pre-calculation process, XR Center has evolved into a multi-machine data preparation system that we could call a “prep farm” (more precisely, a render farm dedicated to data preparation). The idea is to multiply computing power by having several machines work on the same data and then reassembling the results, which is reminiscent of the clustering mentioned above 🤔
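The split-process-reassemble pattern behind a prep farm can be sketched in a few lines of Python. Threads stand in for separate machines here. The `prepare_part` function is a hypothetical placeholder for the real conversion work, such as CAD tessellation or a Nanite build. This illustrates the general pattern, not XR Center’s implementation.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List

Part = Dict[str, object]


def prepare_part(part: Part) -> Part:
    """Hypothetical per-part conversion step (placeholder for the real work)."""
    return {**part, "prepared": True}


def prepare_chunk(chunk: List[Part]) -> List[Part]:
    """Work done by one 'machine': prepare every part of its chunk."""
    return [prepare_part(part) for part in chunk]


def prep_farm(parts: List[Part], machines: int = 4) -> List[Part]:
    """Split the model across workers, process chunks in parallel, then reassemble."""
    if not parts:
        return []
    chunk_size = -(-len(parts) // machines)  # ceiling division
    chunks = [parts[i:i + chunk_size] for i in range(0, len(parts), chunk_size)]
    with ThreadPoolExecutor(max_workers=machines) as pool:
        # map() returns results in the order of the input chunks,
        # so concatenating them restores the original part order.
        prepared_chunks = list(pool.map(prepare_chunk, chunks))
    return [part for chunk in prepared_chunks for part in chunk]
```

On a real farm, each chunk would be shipped to a different machine. Keeping the chunk order is what makes the final reassembly trivial.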

With Unreal Engine 5, SkyReal can integrate real-time rendering with per-pixel display and DMA directly into its system.

Finally, in the best of all possible worlds, the most obvious solution would be to reduce the number of parts. However, this would require instancing elements, that is, reusing a single copy of identical parts many times. This is currently very complex to implement in Unreal.

Perhaps this evolution is on the Unreal Engine roadmap?
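The principle of instancing can be illustrated independently of Unreal. Identical parts (bolts, screws, brackets) are detected, their geometry is stored once, and each occurrence keeps only its own transform. This minimal Python sketch assumes parts are compared by an exact hash of their vertex data, and it simplifies transforms to translations. Real CAD pipelines would also need to handle parts that are identical only up to a transform or tiny numerical differences.

```python
import hashlib
from typing import Dict, List, Sequence, Tuple

Vertex = Tuple[float, float, float]
Transform = Tuple[float, float, float]  # simplified: a translation only
Instances = Dict[str, Tuple[Sequence[Vertex], List[Transform]]]


def geometry_key(vertices: Sequence[Vertex]) -> str:
    """Exact fingerprint of a part's geometry."""
    digest = hashlib.sha256()
    for vertex in vertices:
        digest.update(repr(vertex).encode())
    return digest.hexdigest()


def build_instances(parts: List[Tuple[Sequence[Vertex], Transform]]) -> Instances:
    """Group identical parts: one copy of the geometry plus one transform per occurrence."""
    instances: Instances = {}
    for vertices, transform in parts:
        key = geometry_key(vertices)
        if key not in instances:
            instances[key] = (vertices, [])
        instances[key][1].append(transform)
    return instances
```

The number of meshes left to process is `len(instances)`, so the gain depends entirely on how many parts of a model are repeated.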

And if you want to dive even deeper into Nanite, here’s everything you should know about Unreal Engine 5.